
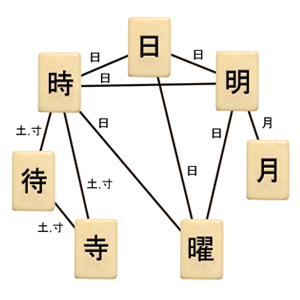

Revision as of 10:01, 20 December 2017

Paper

I'm still working on this page, but our paper is here. I (Daan van den Berg) welcome all feedback you might have. Look me up in the UvA directory, on LinkedIn or Facebook.

Japanese Kanji Characters

The whole idea was quite simple actually, and born from the language enthusiasm of three programmers. Japanese is not an easy language to choose: it sports a character set of approximately 60,000 pieces named Kanji. You only need to learn about 2,000 of them to read Japanese, though, and as serious study also involves writing, this proved a formidable exercise. But there is some systematicity: many Kanji consist of one or more components, of which there are only 252. Individual Kanji are said to carry meaning in a word-like manner, and akin to how compound words are built up ("swordfish", "snowball", "wonderland"), Kanji often derive meaning from their combined constituent components, and are explained as such in textbooks for Japanese children. But learning Japanese as a Dutch grown-up is quite different from learning it as a Japanese child. Some of the "combined-component meanings" just seemed illogical.

Kanjis & Small-Worlds

The network of connected Kanji turned out to be a small-world network, which means it has a high clustering coefficient, a low average path length and a low connection density. As we found out soon enough, computational linguists had already found a large number of small-worlds on various levels in different languages, but this network of Kanji sharing components was not yet one of them. As such, it neatly lined up with existing research in quantitative linguistics and was accepted as a valuable addition.
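To make two of these three measures concrete, here is a minimal sketch of how connection density and average path length could be computed for a small undirected graph. The six-vertex ring `ring` and the function names are made up for illustration and have nothing to do with the actual Kanji network or the code used for the paper. Path lengths are counted in edges with a breadth-first search, and only reachable pairs are included in the average.

```python
from collections import deque

def edge_density(adj):
    """Fraction of all possible undirected edges that are present."""
    n = len(adj)
    m = sum(len(nbrs) for nbrs in adj.values()) // 2  # each edge is stored twice
    return m / (n * (n - 1) / 2)

def average_path_length(adj):
    """Mean shortest-path length over all reachable ordered vertex pairs."""
    total, pairs = 0, 0
    for source in adj:
        # Breadth-first search gives shortest distances in an unweighted graph.
        dist = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for w in adj[u]:
                if w not in dist:
                    dist[w] = dist[u] + 1
                    queue.append(w)
        total += sum(dist.values())
        pairs += len(dist) - 1  # exclude the source itself
    return total / pairs

# Hypothetical toy graph: a ring of six vertices, each linked to its two neighbours.
ring = {i: {(i - 1) % 6, (i + 1) % 6} for i in range(6)}

print(edge_density(ring))         # 6 edges out of 15 possible: 0.4
print(average_path_length(ring))  # distances 1, 1, 2, 2, 3 from every vertex: 1.8
```

A ring like this is not a small-world at all; it only serves to check the arithmetic by hand.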

The functional connectivity graph of the brain is one of many well-known small-worlds; see Sporns & Honey (2006) and others. Image adapted from Sporns' book "Discovering the Human Connectome".

Clustering Coefficient & Average Path Length (optional)

First let's have a brief look at what makes a small-world network a small-world network; it's not all that difficult. First of all, it's about sparse networks, that is, networks with relatively low edge densities. Small-world networks have a high clustering coefficient. The clustering coefficient on a vertex is the fraction of possible connections between its neighbours that actually exist. Vertex C has four neighbours. Between these four we have three edges, out of a possible six, so the clustering coefficient on C is 0.5. Similarly, the clustering coefficient on B is 1, and on F it is 0.33 if we disregard the two neighbours it must have outside the picture. If we don't disregard those, it has five neighbours with either one or two connections between them, out of a possible ten, so the clustering coefficient on F would be either 0.1 or 0.2. The clustering coefficient of the network is simply the average over all its vertices.
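The calculation above can be sketched in a few lines of Python. The edge list below is a made-up graph, not a transcription of the picture: it is only chosen so that C, B and F come out with the same values as in the text (with F's neighbours outside the picture disregarded). The function name is an assumption for illustration as well.

```python
from itertools import combinations

def clustering_coefficient(adj, v):
    """Edges that exist between v's neighbours, divided by the possible number."""
    nbrs = adj[v]
    k = len(nbrs)
    if k < 2:
        return 0.0  # convention: undefined cases count as zero
    links = sum(1 for a, b in combinations(nbrs, 2) if b in adj[a])
    return links / (k * (k - 1) / 2)

# Hypothetical edge list, built to match the numbers in the text.
edges = [
    ("C", "A"), ("C", "B"), ("C", "D"), ("C", "E"),  # C has four neighbours
    ("A", "B"), ("A", "D"), ("D", "E"),              # three edges between them
    ("F", "E"), ("F", "G"), ("F", "H"), ("G", "H"),  # F: three neighbours, one edge
]

# Build an undirected adjacency map: vertex -> set of neighbours.
adj = {}
for a, b in edges:
    adj.setdefault(a, set()).add(b)
    adj.setdefault(b, set()).add(a)

print(clustering_coefficient(adj, "C"))  # 3 of 6 possible edges: 0.5
print(clustering_coefficient(adj, "B"))  # 1 of 1 possible edge: 1.0
print(round(clustering_coefficient(adj, "F"), 2))  # 1 of 3 possible edges: 0.33

# Network clustering coefficient: the average over all vertices.
network_cc = sum(clustering_coefficient(adj, v) for v in adj) / len(adj)
print(network_cc)
```

Treating vertices with fewer than two neighbours as having coefficient zero is one common convention; leaving them out of the average is another, and the two give different network averages.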


Gelb's Hypothesis: from pictures to sounds

So, many language networks have small-world properties. But for Japanese, there's a little more to it. As it turns out, there's an old conjecture by Ignace Jay Gelb (19xx-19xx) that appears to coincide with our findings quite neatly. An American linguist of Polish descent, Gelb hypothesized that all written languages go through a phase transition from being picture-based to being sound-based. On closer study, this idea is quite coarse, and the exact trajectory might differ from language to language, but the core concept remains quite alluring in any form, especially where it concerns Japanese Kanji.

Circumstantial Evidence


Who's who & where

Small-World Networks

More

Maybe later.